
Understanding What PMF Validation Actually Looks Like

Let's be candid: product-market fit validation is one of the most misunderstood concepts in the software world. It's often mistaken for getting positive feedback or signing up a handful of enthusiastic early adopters. While those are great starting points, they are not, by themselves, proof that you have a sustainable business. True validation is about finding a strong signal in a noisy market—a signal that your product isn't just a "nice-to-have," but a "must-have" for a specific, sizable group of people.

Many teams fall into the trap of confirmation bias. They hear what they want to hear from a few friendly customers and declare victory. I’ve seen this firsthand. A team might build a feature, get five customers to say they love it, and assume they’ve found fit. But what they often miss is the context: Are these users paying? Would they be genuinely upset if the product disappeared tomorrow? Are they recommending it to others without being prompted? These are the tougher questions that separate early traction from genuine product-market fit validation.

Enthusiastic Early Adopters vs. True Market Fit

The difference between a small, passionate user base and real market fit is stark. Early adopters are often visionaries who love trying new things. They are forgiving of bugs and incomplete features because they are excited by the potential. While their feedback is invaluable, their enthusiasm can create a false positive.

True market fit, on the other hand, is less about passion and more about necessity. It’s when your product becomes embedded in a customer's workflow. They don’t just like it; they rely on it. This reliance is the acid test.

Here’s how to spot the difference:

- Pull vs. Push: Are you constantly pushing to get users engaged, or are they pulling the product into their lives organically? High organic growth is a strong indicator of pull.

- Feedback Focus: Early adopters give feedback on what your product could be. Customers who already depend on your product give feedback on how to make it even better.

- Payment Behavior: Early adopters might use a free version forever. True market fit is validated when a significant portion of users willingly pay because the value exceeds the cost.

The Critical Role of Timing and Market Readiness

A brilliant product launched at the wrong time is just a brilliant failure. Market readiness is a factor that many product leaders underestimate. You could have the perfect solution, but if the market isn't experiencing the problem you solve with enough pain, or if the necessary infrastructure isn't in place, you won't get traction. Think of all the video streaming services that failed in the early 2000s before high-speed internet was widespread.

The stakes are high. By one widely cited estimate, over 90% of startups fail, and among those that succeed, around 70% credit finding PMF early as a key factor in their survival. Validation isn't a "nice-to-do" task; it's the core mission. Understanding your market’s current state is just as important as understanding your product’s features.

Building a Validation Framework That Delivers Results

Moving from the idea of market fit to the reality of confirming it requires a system. Without a structured approach, you end up chasing vanity metrics and getting lost in noisy feedback. A solid product-market fit validation process isn't just a checklist you run through once; it’s a repeatable engine for generating real insights. It’s how you separate wishful thinking from genuine market demand. Successful teams don't just ask, "Do people like this?" They build a system to answer, "Which specific people need this, how badly, and is that group large enough to build a business on?"

A great way to begin is by swapping generic assumptions for specific, testable hypotheses. This usually starts with identifying an incredibly narrow customer segment. Instead of aiming for "small businesses," you might focus on "U.S.-based plumbing companies with 5-10 employees that don't yet use scheduling software." This specificity is your superpower. It lets you design relevant experiments and collect clean, actionable data instead of vague, generalized opinions.

Identifying and Scoring Customer Segments

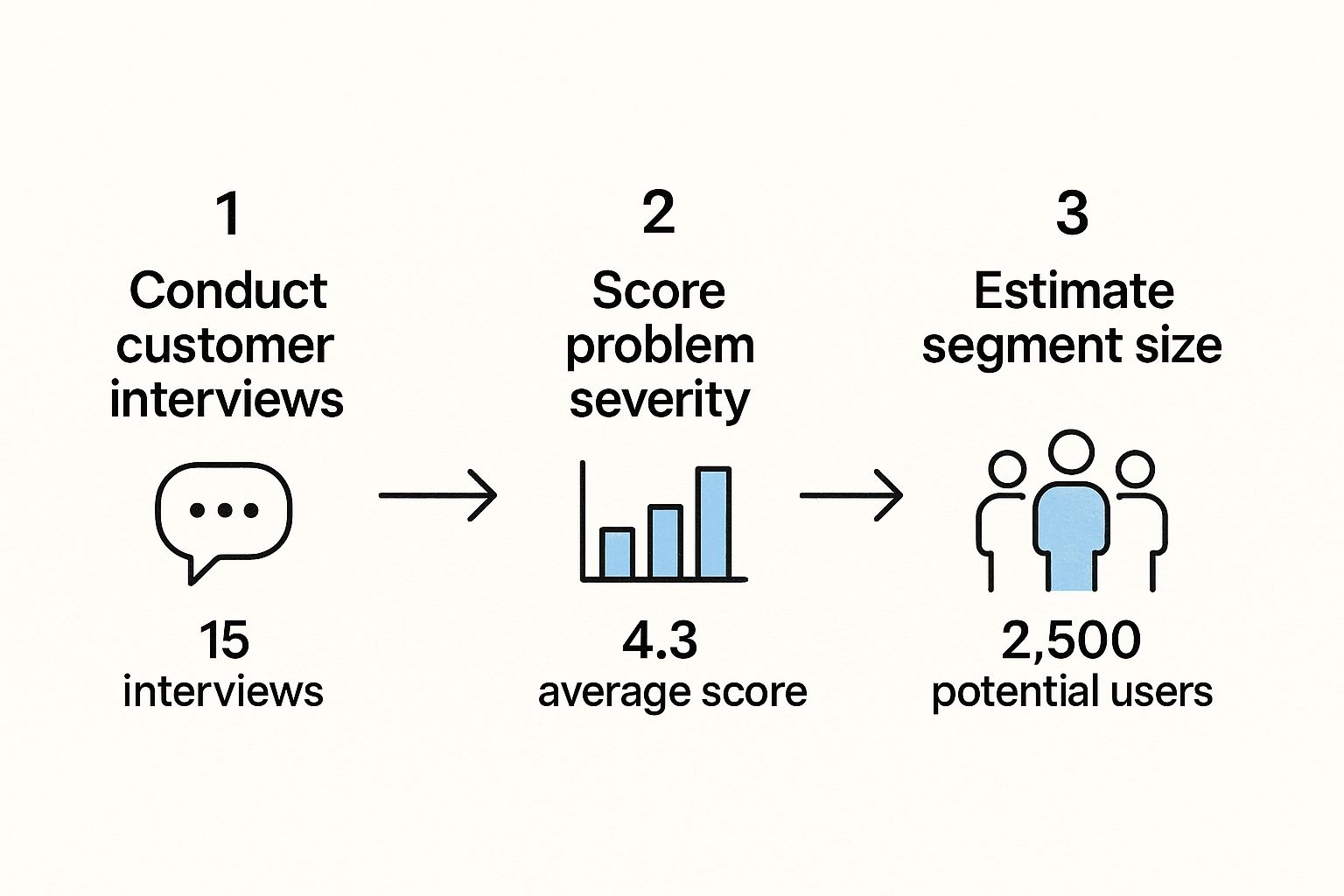

The first part of any reliable framework is figuring out who your customer is—and just as importantly, who they are not. Once you’ve narrowed down an initial target segment, your next move is to qualify their pain. Are you solving a minor annoyance or a five-alarm fire? To find out, you need to talk to real people. Forget about large-scale surveys for now; start with 15-20 in-depth customer interviews.

During these conversations, your job is to listen, not to sell. You’re searching for patterns in their problems, workflows, and frustrations. A powerful technique is to score the problem's severity on a scale of 1 to 10. A problem scoring an 8 or higher is often a "hair-on-fire" issue they are actively trying to fix. Problems scoring below a 5 are usually "nice-to-haves" that won't drive real adoption. With this qualitative data in hand, you can then estimate the size of that high-pain segment to see if it’s a market worth pursuing.
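To make the scoring step concrete, here is a minimal Python sketch. The function names and the 12,000-company niche size are hypothetical, and treating scores of 5 to 7 as a "moderate" band is an assumption, since the text only defines 8+ and below 5. It also assumes your interview sample is representative of the whole niche, which is a generous assumption for 15-20 conversations.

```python
# A minimal sketch of severity scoring; all function names are hypothetical.
# Bands follow the text: 8+ is "hair-on-fire", below 5 is a "nice-to-have".
# Treating 5-7 as "moderate" is an assumption made for this example.


def classify_severity(score: int) -> str:
    """Map one interviewee's 1-10 problem-severity score to a band."""
    if not 1 <= score <= 10:
        raise ValueError(f"score must be between 1 and 10, got {score}")
    if score >= 8:
        return "hair_on_fire"
    if score < 5:
        return "nice_to_have"
    return "moderate"


def high_pain_share(scores: list[int]) -> float:
    """Fraction of interviewees who rated the problem 8 or higher."""
    if not scores:
        raise ValueError("need at least one interview score")
    high = sum(1 for s in scores if classify_severity(s) == "hair_on_fire")
    return high / len(scores)


def estimate_high_pain_segment(total_in_niche: int, scores: list[int]) -> int:
    """Rough size of the high-pain segment: niche size x high-pain share.

    Assumes the interview sample is representative of the niche, which
    15-20 interviews can only roughly approximate.
    """
    return round(total_in_niche * high_pain_share(scores))


if __name__ == "__main__":
    # Hypothetical scores from 15 interviews and a hypothetical niche size.
    scores = [9, 8, 3, 7, 10, 6, 8, 4, 9, 2, 8, 5, 9, 7, 8]
    print(f"High-pain share: {high_pain_share(scores):.0%}")
    print("Estimated high-pain companies:", estimate_high_pain_segment(12_000, scores))
```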

This visual shows a simple flow for this initial validation: conduct interviews, score the problem, and then estimate the market segment size.

The main takeaway here is the sequence: deep qualitative understanding must come before quantitative scaling. This ensures you’re not just building something, but building something people desperately need.

To help you choose the right approach for your team, here's a look at a few common validation frameworks. Each has its own strengths depending on your stage and resources.

| Framework Type | Best For | Time Investment | Key Metrics | Reliability |
|---|---|---|---|---|
| Customer Interviews | Early-stage problem discovery and validating pain points. | Low (Weeks) | Problem Severity Score, Qualitative Feedback Patterns | High for insight, variable for prediction |
| Concierge MVP | Testing a service-based solution before building any software. | Medium (Weeks to Months) | Willingness to Pay, Customer Retention, Manual Effort Score | High for service validation, moderate for scalability |
| Wizard of Oz MVP | Simulating a complex automated product with manual backend work. | Medium (Weeks to Months) | User Engagement, Task Completion Rate, Perceived Value | High for UX testing, moderate for technical feasibility |
| Lean Startup Build-Measure-Learn (BML) Cycle | Iterating on a live product with a small user base. | High (Ongoing) | Cohort Retention, Net Promoter Score (NPS), Feature Adoption | High for optimization, requires an existing product |

These frameworks aren't mutually exclusive. Many successful products start with interviews, evolve into a Concierge or Wizard of Oz MVP to test the solution, and then adopt the Build-Measure-Learn cycle for long-term growth. The goal is to progressively de-risk your assumptions at each stage.

From Feedback Loops to Scalable Models

With a validated high-pain segment, your framework needs to evolve. The focus shifts to creating tight feedback loops that connect your product development directly to user needs. This is a core idea in many successful methodologies, including those found in the lean startup approach. It's all about building, measuring, and learning in rapid cycles.

The evolution of these validation methods has led to structured maturity frameworks, now widely used by startup accelerators. These models often define product-market fit validation as a journey through multiple stages: from initial problem discovery to measurable customer satisfaction and, finally, scalable growth. It’s a methodical process of de-risking your venture every step of the way. The ultimate goal is to build a system that not only confirms your initial fit but also helps you keep it as your product and market change over time.

Mastering the Sean Ellis Test and Quantitative Methods

While customer interviews give you the rich "why" behind user behavior, you eventually need to answer "what" and "how many." This is where you trade anecdotal stories for hard data, and one of the most direct tools in your arsenal is the Sean Ellis Test. It’s a beautifully simple way to get a clear, numerical signal for product-market fit validation, cutting through the noise with one powerful question.

Created in the early 2010s, this test asks users a single, crucial question: "How would you feel if you could no longer use this product?" Respondents typically choose between "very disappointed," "somewhat disappointed," and "not disappointed." The benchmark Sean Ellis identified is that if at least 40% of your users say they would be "very disappointed," you're likely onto something big. It’s a simple yet effective litmus test, and it is still widely used today.
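Scoring the survey itself is simple arithmetic. The sketch below, with hypothetical function names, counts the share of "very disappointed" answers and compares it with the 40% benchmark. One assumption to flag: any answer outside the three options (such as "N/A") is dropped from the denominator.

```python
from collections import Counter

# The three answer options; matching is case-insensitive.
VERY = "very disappointed"
SOMEWHAT = "somewhat disappointed"
NOT_DISAPPOINTED = "not disappointed"
OPTIONS = {VERY, SOMEWHAT, NOT_DISAPPOINTED}

BENCHMARK = 0.40  # the 40% "very disappointed" threshold from the text


def sean_ellis_score(responses: list[str]) -> float:
    """Share of valid answers that are "very disappointed".

    Answers outside the three options (e.g. "N/A") are dropped from the
    denominator; that is an assumption of this sketch.
    """
    normalized = [r.strip().lower() for r in responses]
    valid = [r for r in normalized if r in OPTIONS]
    if not valid:
        raise ValueError("no valid responses")
    return Counter(valid)[VERY] / len(valid)


def passes_benchmark(responses: list[str]) -> bool:
    """True when at least 40% of valid answers are "very disappointed"."""
    return sean_ellis_score(responses) >= BENCHMARK


if __name__ == "__main__":
    # Hypothetical survey results.
    answers = (["Very disappointed"] * 23 + ["Somewhat disappointed"] * 18
               + ["Not disappointed"] * 9 + ["N/A"] * 2)
    score = sean_ellis_score(answers)
    print(f"{score:.0%} very disappointed; benchmark met: {passes_benchmark(answers)}")
```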

Implementing and Interpreting the Test

Just asking the question isn't enough; who and how you ask is critical. To get a clean signal, you need to survey users who have genuinely experienced your product's core value. I always recommend focusing on people who have:

- Used your product recently, say within the last two weeks.

- Engaged with the main features at least a couple of times.

Don’t send this survey to brand-new signups or users who've already churned. Your goal is to gauge the sentiment of your active user base. Once the results are in, the real work starts. Hitting that 40% benchmark is a great sign, but the most valuable insights are hidden in the segments.
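Here is one way to encode those targeting rules when pulling your survey list, assuming a hypothetical `User` record from your analytics export. The two-week window and the two-use minimum come from the guidelines above; the seven-day minimum account age used to screen out brand-new signups is an assumption you should tune.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class User:
    """Hypothetical user record; adapt field names to your own analytics data."""
    user_id: str
    signup_date: date
    last_active: date
    core_feature_uses: int
    churned: bool = False


def eligible_for_survey(user: User, today: date, recent_days: int = 14,
                        min_core_uses: int = 2, min_tenure_days: int = 7) -> bool:
    """Keep active users who have genuinely experienced the core value."""
    return (
        not user.churned                                                 # skip churned users
        and today - user.signup_date >= timedelta(days=min_tenure_days)  # skip brand-new signups
        and today - user.last_active <= timedelta(days=recent_days)      # used recently
        and user.core_feature_uses >= min_core_uses                      # engaged with core features
    )


def survey_audience(users: list[User], today: date) -> list[User]:
    """Filter a user list down to the people who should receive the survey."""
    return [u for u in users if eligible_for_survey(u, today)]
```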

Dive deep into the "very disappointed" group. Who are they? What job titles do they have? What features do they use most? This group represents your ideal customer profile—these are the people you need to find more of. On the flip side, the "not disappointed" group is just as important. Analyzing them tells you who your product isn't for, helping you refine your marketing and stop wasting budget on the wrong audience. This is a core part of the customer development journey—a continuous loop of learning and refining.
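A rough sketch of that segment analysis, assuming each survey answer can be joined to a segment label such as job title. The function name and the five-response minimum per segment are hypothetical choices meant to keep tiny groups from skewing the picture.

```python
from collections import defaultdict


def very_disappointed_by_segment(rows, min_responses: int = 5) -> dict[str, float]:
    """rows: iterable of (segment, answer) pairs, where segment might be a job
    title, company size, or most-used feature.

    Returns {segment: share answering "very disappointed"}, highest first.
    Segments with fewer than min_responses answers are skipped so a couple of
    enthusiastic users don't masquerade as an ideal customer profile.
    """
    totals = defaultdict(int)
    very = defaultdict(int)
    for segment, answer in rows:
        totals[segment] += 1
        if answer.strip().lower() == "very disappointed":
            very[segment] += 1
    shares = {seg: very[seg] / n for seg, n in totals.items() if n >= min_responses}
    return dict(sorted(shares.items(), key=lambda kv: kv[1], reverse=True))
```

Segments near the top of the result are candidates for your ideal customer profile; segments near the bottom tell you where to stop spending.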

Beyond the Test: Essential Quantitative Metrics

The Sean Ellis Test gives you a fantastic snapshot, but a full picture of product-market fit validation requires tracking what users do, not just what they say. You need ongoing metrics that tell a story about retention, engagement, and sustainable growth. These are the numbers that prove your product has staying power.

To give you a clearer idea, here are some of the most important metrics to track. The table below outlines what good looks like, how to measure it, and what warning signs to watch for.

| Metric | Good Benchmark | Measurement Method | Frequency | Warning Signs |
|---|---|---|---|---|
| Cohort Retention | Flat or smiling curve after 3-6 months | Track user cohorts by sign-up month | Monthly | Steep, continuous drop-off after the first month |
| Net Promoter Score (NPS) | 30+ for SaaS; 50+ is excellent | In-app or email survey using a tool like Hotjar | Quarterly | Negative or single-digit scores; high number of Passives |
| Feature Adoption Rate | >25% for key features within first 90 days | Product analytics tools like Mixpanel | Monthly | Key features are ignored; usage concentrated on one minor feature |
| Organic Growth Rate | >15% month-over-month from non-paid channels | Analytics (traffic, sign-ups by source) | Monthly | Stagnant growth without paid marketing spend |
| Customer Lifetime Value (CLV) | CLV > 3x Customer Acquisition Cost (CAC) | (Avg. Revenue per User) / (Churn Rate), both measured over the same period | Quarterly | CLV is less than CAC; decreasing CLV over time |
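Two of these metrics are straightforward to compute yourself. This sketch uses the standard NPS definition (share of promoters scoring 9-10 minus share of detractors scoring 0-6) and the simple CLV formula from the table. The function names and the sample figures are hypothetical.

```python
def net_promoter_score(scores: list[int]) -> float:
    """NPS = % promoters (9-10) minus % detractors (0-6); passives (7-8) count
    only in the denominator. The result ranges from -100 to 100."""
    if not scores:
        raise ValueError("need at least one response")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("NPS answers must be on the 0-10 scale")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


def customer_lifetime_value(arpu: float, churn_rate: float) -> float:
    """Simple CLV from the table: ARPU / churn rate, both for the same period."""
    if not 0 < churn_rate <= 1:
        raise ValueError("churn_rate must be in (0, 1]")
    return arpu / churn_rate


def meets_clv_cac_benchmark(clv: float, cac: float, multiple: float = 3.0) -> bool:
    """True when CLV exceeds `multiple` x CAC (3x per the table)."""
    return clv > multiple * cac


if __name__ == "__main__":
    nps = net_promoter_score([10, 9, 9, 8, 7, 6, 3, 10, 9, 5])
    clv = customer_lifetime_value(arpu=99.0, churn_rate=0.03)  # hypothetical monthly figures
    print(f"NPS: {nps:.0f}, CLV: ${clv:,.0f}, CLV > 3x CAC: {meets_clv_cac_benchmark(clv, cac=800.0)}")
```

Many teams multiply ARPU by gross margin before dividing by churn, which gives a more conservative CLV; the simpler form from the table is kept here.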

Combining a powerful leading indicator like the Sean Ellis Test with these ongoing behavioral metrics creates a solid system for product-market fit validation.

This data-driven approach takes the guesswork out of the equation. It shows you whether your product truly connects with the market and has built a foundation for real, sustainable growth. It's the evidence you need to confidently double down on what's working or make the tough but necessary call to pivot.

Designing Experiments That Generate Reliable Insights

Having a solid framework and your key metrics lined up is a great starting point, but the real test for product-market fit validation is the quality of your experiments. Anyone can run a test, but designing one that gives you trustworthy, actionable insights? That’s an art form. A poorly designed experiment can give you a false positive, tricking you into pouring resources into a product the market won't actually support.

The objective is to get past simple surveys and create situations that show you what users actually do, not just what they say they’ll do. This means building tests that reveal genuine intent and behavior, focusing on actions over opinions.

Creating Meaningful Hypothesis Frameworks

Before you test anything, you need a clear hypothesis. A strong hypothesis isn’t just a wild guess; it’s a specific, testable statement that links a product change to a real user behavior. A weak hypothesis sounds like, "Users will like the new dashboard." That tells you nothing.

A strong one is much sharper: "By adding a one-click report generation feature for financial analysts, we will see a 20% increase in weekly active users within that group because it saves them a ton of time."

This structure forces you to think through the details:

- Identify the target user: Who, specifically, is this for?

- Define the change: What are you actually building or testing?

- Predict a measurable outcome: What number will prove you're right?

- State the user value: Why do you believe this change will work?

This approach turns a vague idea into a focused, scientific process. It makes sure every experiment has a clear purpose and a defined finish line.
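The four parts of a strong hypothesis map neatly onto a small data structure. This is a minimal sketch with hypothetical names, using the financial-analyst example from above; it assumes the predicted outcome is a relative lift in a single metric.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    target_user: str       # who, specifically, is this for?
    change: str            # what are we building or testing?
    metric: str            # which number will prove us right?
    predicted_lift: float  # relative increase, e.g. 0.20 for +20%
    rationale: str         # why we believe the change will work

    def observed_lift(self, baseline: float, observed: float) -> float:
        """Relative change in the metric versus its baseline."""
        if baseline <= 0:
            raise ValueError("baseline must be positive")
        return (observed - baseline) / baseline

    def is_supported(self, baseline: float, observed: float) -> bool:
        """True if the metric rose by at least the predicted relative lift."""
        return self.observed_lift(baseline, observed) >= self.predicted_lift


report_hypothesis = Hypothesis(
    target_user="financial analysts",
    change="one-click report generation",
    metric="weekly active users in that group",
    predicted_lift=0.20,
    rationale="it saves them a lot of time",
)
```

A raw before/after comparison like this ignores normal week-to-week noise, so in practice you would pair it with a control group or a significance check before calling the hypothesis supported.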

Balancing Speed with Accuracy in Validation

In the race to find PMF, it's tempting to cut corners and trade accuracy for speed. This is a dangerous move. While fast feedback is great, it’s useless if it isn’t reliable. A common mistake is testing with the wrong people. Getting rave reviews from your friendly, forgiving early adopters for a feature meant for mainstream users will give you skewed, overly optimistic results.

Think about the early days of Figma. Before their public launch, the team ran a long private beta. They could have rushed to market, but instead, they carefully gathered data from a specific group of designers.

This screenshot shows the collaborative heart of their platform, a core idea they absolutely had to get right. By watching real design teams use these features in their day-to-day work during the beta, Figma got high-quality insights, not just shallow opinions.

This gets to a core principle: the reliability of your insights is directly linked to how closely your test environment mirrors the real world. For example, instead of just asking if users would pay for a new feature, run a "painted door" test: set up a pre-order landing page and see how many people actually pull out their credit card. This behavioral evidence is far more powerful than a simple "yes" in a survey.
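Speed versus accuracy shows up clearly in painted door tests: a handful of pre-orders from a small audience can look like a strong signal. One way to keep yourself honest is to put a confidence interval around the conversion rate. The sketch below uses the Wilson score interval, a standard choice for proportions that is swapped in here rather than taken from the text; the 2% target rate and the traffic numbers are hypothetical.

```python
import math


def wilson_interval(conversions: int, visitors: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a conversion rate (z=1.96 gives roughly 95%)."""
    if visitors <= 0 or not 0 <= conversions <= visitors:
        raise ValueError("need visitors > 0 and 0 <= conversions <= visitors")
    p = conversions / visitors
    denom = 1 + z**2 / visitors
    centre = (p + z**2 / (2 * visitors)) / denom
    half_width = z * math.sqrt(p * (1 - p) / visitors + z**2 / (4 * visitors**2)) / denom
    return max(0.0, centre - half_width), min(1.0, centre + half_width)


def clears_target(conversions: int, visitors: int, target_rate: float) -> bool:
    """Conservative read: even the low end of the interval beats the target."""
    low, _ = wilson_interval(conversions, visitors)
    return low >= target_rate


if __name__ == "__main__":
    # Hypothetical painted-door results: 38 pre-orders from 1,000 visitors.
    low, high = wilson_interval(38, 1000)
    print(f"Pre-order rate 3.8%, plausible range {low:.1%} to {high:.1%}")
    print("Clears a 2% target:", clears_target(38, 1000, 0.02))
```

Judging by the lower bound is deliberately conservative: with 1 pre-order from 50 visitors the observed rate equals the 2% target, but the lower bound sits well below it, so the test needs more traffic before it tells you anything.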

It’s this commitment to designing rigorous, behavior-focused experiments that separates teams that think they have PMF from those that know they do.

Recognizing True PMF Versus Early Traction

One of the most dangerous places for a software leader is the gray area between initial excitement and sustainable market demand. It’s easy to mistake the applause from a handful of enthusiastic users for genuine product-market fit. This premature celebration often leads companies down the wrong path, scaling a product that only a niche, forgiving audience truly loves. True product-market fit validation isn’t about finding fans; it's about proving necessity.

Early traction often feels fantastic. You get positive feedback, see some initial sign-ups, and maybe even a few glowing social media posts. The problem is that this "traction" can be misleading. It might come from your personal network, tech enthusiasts who love anything new, or users who like the idea of your product but don't actually need it for their daily workflow. A CB Insights report highlights a stark reality: 35% of startups fail because there is no market need, a clear sign that many misread these early signals.

Interpreting Mixed Signals in Your Data

So how do you separate the signal from the noise? The key is to look at the behavior behind the numbers. A surge in sign-ups is great, but what does the retention curve look like for those new users? If they disappear after a week, you have a leaky bucket, not product-market fit. This is where you must be brutally honest with yourself and your team.
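To answer that question with data, group users into sign-up cohorts and measure what share of each cohort is still active in each following month. This minimal sketch assumes a hypothetical input format (months as integers, plus the set of months each user was active) and uses a simple heuristic for "flattening": no month-over-month drop larger than two percentage points across the last three months. Both thresholds are assumptions to adjust for your product.

```python
from collections import defaultdict


def cohort_retention(users: dict[str, tuple[int, set[int]]],
                     last_observed_month: int) -> dict[int, list[float]]:
    """users maps user_id -> (signup_month, months_active), with months as
    integers (e.g. 0 = first month of data). Returns, per signup cohort, the
    share of that cohort active at month offsets 0, 1, 2, ... up to the last
    month we actually have data for (so young cohorts get shorter curves)."""
    cohorts = defaultdict(list)
    for signup_month, months_active in users.values():
        cohorts[signup_month].append(months_active)
    curves = {}
    for signup_month, members in sorted(cohorts.items()):
        curves[signup_month] = [
            sum(1 for active in members if signup_month + k in active) / len(members)
            for k in range(last_observed_month - signup_month + 1)
        ]
    return curves


def is_flattening(curve: list[float], window: int = 3, tolerance: float = 0.02) -> bool:
    """Heuristic: over the last `window` months, retention never falls by more
    than `tolerance` (absolute) from one month to the next."""
    tail = curve[-window:]
    return len(tail) == window and all(a - b <= tolerance for a, b in zip(tail, tail[1:]))
```

A curve that keeps sliding toward zero is the leaky bucket described above; a curve that levels off means some users have made the product part of their routine.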

Here are some common mixed signals and what to make of them:

- High Engagement, Low Payment: Users love your free tier but won't upgrade. This suggests you’ve built a useful tool, but the value isn't strong enough to open wallets. You might have a great feature, but not a business.

- Positive Feedback, No Referrals: Customers say they love the product in surveys but never recommend it to others. True PMF creates evangelists; when users solve a painful problem, they naturally tell their peers. A lack of word-of-mouth growth is a serious red flag.

- Segment-Specific Success: One small user group is highly active while others are indifferent. This isn't failure—it's a critical clue! It means you may have found PMF for a specific niche. The next step is to figure out if that niche is large enough to build a real business around.

Pivoting vs. Persisting

Recognizing these patterns helps you make one of the toughest leadership decisions: whether to pivot or persist. If your data shows strong retention and growing organic interest within a specific customer segment, persistence is key. Double down on what's working for that group.

However, if your metrics are flat across the board and users would feel little to no pain if your product vanished, it’s a strong sign that a pivot is necessary. Making this call requires objectivity, which is a critical part of managing product risk. Ultimately, product-market fit validation isn't about proving your initial idea was right; it’s about finding the right idea that the market pulls from you.

Maintaining PMF as You Scale and Evolve

Finding product-market fit isn't like crossing a finish line; it’s more like starting a long-term relationship with your market—one that needs constant work. As your company grows, that relationship changes. The scrappy strategies that got you your initial footing won't be enough to keep it as you add features, expand your team, and explore new territories. The real challenge is keeping that spark alive as your teams get bigger and more distant from the front lines.

Growth introduces complexity. A product team of five can stay close to customers almost by default. A team of fifty? Not so much. This is where your product-market fit validation process has to evolve from a one-off project into an ongoing, embedded system. It becomes less about grand discoveries and more about building sensitive, automated feedback loops that act as an early warning system. These systems help you spot when your fit might be weakening, long before it shows up as a drop in revenue.
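An early warning check can be as simple as comparing each new reading of a key metric with its recent average. The sketch below is a hypothetical example, not a prescribed method: it flags any metric whose latest value is more than 10% below the mean of the previous three readings. It is meant for metrics where higher is better and values are positive, such as the Sean Ellis score or month-3 retention.

```python
from statistics import mean


def fit_warning(readings: list[float], lookback: int = 3,
                max_relative_drop: float = 0.10) -> bool:
    """readings are oldest-first; the last one is the newest. Flags when the
    newest reading is more than max_relative_drop below the average of the
    previous `lookback` readings."""
    if len(readings) < lookback + 1:
        return False  # not enough history to judge
    baseline = mean(readings[-lookback - 1:-1])
    if baseline <= 0:
        return False  # heuristic only makes sense for positive metrics
    return (baseline - readings[-1]) / baseline > max_relative_drop


def metrics_to_investigate(series: dict[str, list[float]], **kwargs) -> list[str]:
    """Names of the tracked metrics whose latest reading tripped the warning."""
    return [name for name, readings in series.items() if fit_warning(readings, **kwargs)]
```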

Adapting Validation for New Horizons

As you scale, you'll naturally look toward new customer segments or geographic markets. Each new frontier requires its own focused product-market fit validation. A "must-have" feature for your US-based enterprise clients might be totally irrelevant to mid-market European customers. Treating them as a single group is a recipe for a failed expansion.

To handle this, create a repeatable playbook for entering new segments:

- Isolate and Hypothesize: Start with a clear, specific hypothesis. For example, "We believe our project management tools will solve scheduling conflicts for German manufacturing firms with over 500 employees because of their emphasis on production line efficiency."

- Run a Targeted Test: Don’t just open the floodgates. Run a small, localized pilot. This could even be a "concierge" test where you manually provide a service to confirm the need before you build out and localize the entire product.

- Measure Segment-Specific Metrics: Track retention, NPS, and engagement just for this new group. Compare these numbers against your core, established market. A major drop-off is a clear signal of a fit problem.
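For the comparison step, a small helper can list which metrics in the new segment fall meaningfully short of your core market. This is a sketch under stated assumptions: the metric names, the pilot numbers, and the 25% relative shortfall that counts as a "major drop-off" are all hypothetical, and every metric is assumed to be higher-is-better.

```python
def fit_gaps(core: dict[str, float], segment: dict[str, float],
             max_relative_gap: float = 0.25) -> dict[str, float]:
    """Return {metric: relative shortfall} for every metric where the new
    segment trails the core market by more than max_relative_gap.
    Assumes higher is better for every metric passed in."""
    gaps = {}
    for metric, core_value in core.items():
        if metric not in segment or core_value == 0:
            continue  # nothing to compare against
        shortfall = (core_value - segment[metric]) / abs(core_value)
        if shortfall > max_relative_gap:
            gaps[metric] = round(shortfall, 3)
    return gaps


if __name__ == "__main__":
    # Hypothetical numbers for the core market and a German manufacturing pilot.
    core_market = {"month3_retention": 0.40, "nps": 42, "weekly_active_share": 0.60}
    german_pilot = {"month3_retention": 0.22, "nps": 38, "weekly_active_share": 0.55}
    print(fit_gaps(core_market, german_pilot))
```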

Building a Culture of Continuous Validation

Ultimately, maintaining PMF while you evolve comes down to your company culture. It’s about making it clear that market validation is everyone’s job, not just a task for the product team. This means creating transparent dashboards where anyone can see key PMF metrics like churn rate, cohort retention, and customer feedback trends.

To put this in perspective, a 2023 report noted that over 300,000 new businesses were started in the US in a single quarter. In such a competitive environment, the companies that succeed are the ones that build a culture of constant market awareness. They empower their teams to ask tough questions and stay connected to customer realities. This ensures the product evolves with the market, not away from it. This cultural commitment is the most durable way to protect your hard-won position.

Your PMF Validation Action Plan

Knowing what to do is one thing, but turning that knowledge into a concrete plan is what separates stalled products from successful ones. This is where we shift from theory to a hands-on, executable roadmap. The idea isn't to just run a few scattered tests, but to create a repeatable process that gives you a genuine edge. Let's break down how to build an action plan you can start using today.

Prioritizing Your Validation Activities

You can't test everything at once—your time, budget, and team capacity are all limited. This makes prioritization your most important task. Start by mapping out your biggest assumptions. Ask yourself: which of our core beliefs, if proven wrong, would completely derail our strategy? That’s your starting point.

A great way to manage this is by creating a validation backlog, much like a product backlog. Each item in this backlog is a testable hypothesis. You then rank these hypotheses based on two critical factors: risk and confidence.

- High-Risk, Low-Confidence Assumptions: These are your immediate priorities. They represent the foundational ideas you have the least evidence for. A classic example might be, "We believe our target customers are willing to pay $99/month for our main feature set." This is a huge assumption that needs to be tested early.

- Low-Risk, High-Confidence Assumptions: These can be moved to the back of the line. You might already have strong anecdotal feedback supporting these, so running rigorous tests right away isn't as urgent.

This framework forces you to focus your energy where it truly counts, systematically de-risking your business model with each experiment.
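A validation backlog can be ranked with a few lines of code. The sketch below scores each assumption as risk multiplied by (6 minus confidence), both on a 1-5 scale, so high-risk, low-confidence items rise to the top. That scoring formula is an illustrative assumption rather than a standard, and the example assumptions are hypothetical apart from the $99/month pricing one from the text.

```python
from dataclasses import dataclass


@dataclass
class Assumption:
    statement: str
    risk: int        # 1 = minor if wrong ... 5 = would derail the strategy
    confidence: int  # 1 = no evidence yet ... 5 = strong evidence

    def __post_init__(self) -> None:
        if not (1 <= self.risk <= 5 and 1 <= self.confidence <= 5):
            raise ValueError("risk and confidence must be between 1 and 5")

    @property
    def priority(self) -> int:
        # High risk combined with low confidence rises to the top.
        return self.risk * (6 - self.confidence)


def prioritize(backlog: list[Assumption]) -> list[Assumption]:
    """Highest-priority assumptions first; ties keep their original order."""
    return sorted(backlog, key=lambda a: a.priority, reverse=True)
```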

Checklists for Key Validation Stages

To keep your team aligned and ensure nothing critical gets missed, use simple checklists for each major validation phase. This builds accountability and structure into what can otherwise be a chaotic process.

Problem/Solution Fit Checklist:

- Have we conducted 15-20 deep-dive interviews with our ideal customer segment?

- Did we identify a true "hair-on-fire" problem that users rated an 8 or higher (out of 10)?

- Have we built a low-fidelity prototype or mockup to visualize the solution?

- Did interviewees confirm that our proposed solution actually solves their problem?

Product/Market Fit Checklist:

- Have we launched a Minimum Viable Product (MVP) to a specific, targeted segment?

- Did we run the Sean Ellis Test with a group of our most engaged users?

- Did we meet or exceed the 40% "very disappointed" benchmark?

- Are our analytics set up to track cohort retention and user engagement over time?

- Can we see our retention curve starting to flatten after the first 2-3 months?

As you build out your own PMF validation plan, map these activities onto your broader app development project plan so validation work has a clear place in your roadmap.

From Plan to Practice

This guide is meant to give you an honest, clear-eyed view of what it really takes to find product-market fit. It's a demanding, and often humbling, journey. But it’s the only proven path to building a product that doesn’t just launch, but endures. The discipline you develop through rigorous product-market fit validation is an asset that will pay dividends at every stage of your company’s growth.

Struggling to get your team on the same page or build a growth strategy that delivers? With over 25 years of experience scaling software companies, I work with executives like you to foster data-driven cultures and achieve sustainable results. Learn how my personalized coaching can help you and your team thrive.
